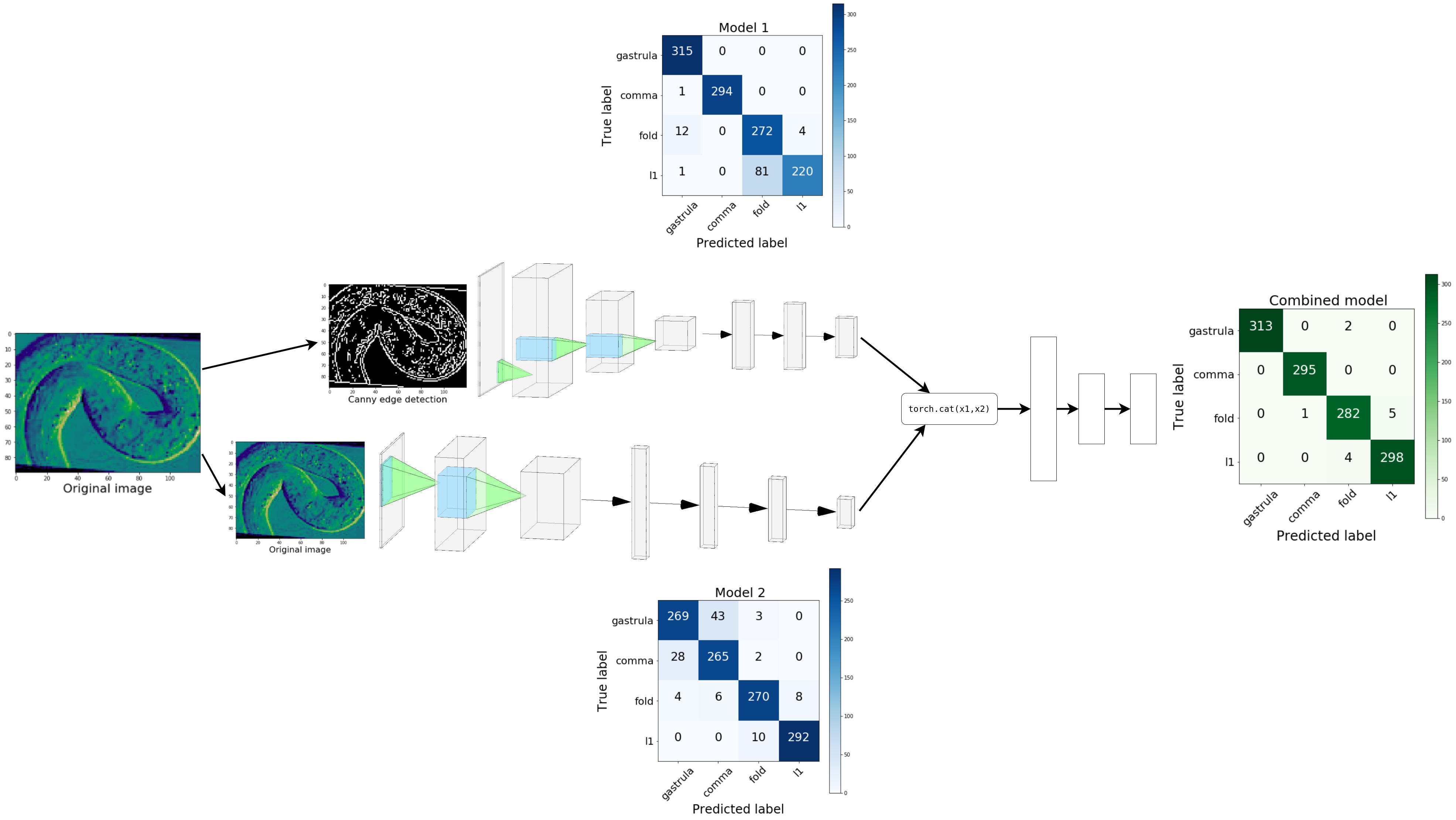

Parallel CNNs work just as well as they look

But why use them anyway?

- Because when two different architectures are trained on the same training set, they don’t share the same weaknesses (i.e., their confusion matrices differ).

- This means that when both are combined, they tend to neutralise each other’s weaknesses, which usually gives us a boost in accuracy.
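To make the "neutralising weaknesses" idea concrete, here is a minimal sketch that combines two models by averaging their per-class probabilities. This is only one simple way to combine models and is an assumption on my part: the parallel CNN in the figure may instead merge feature maps inside a single network. The function name `ensemble_predict` and all the numbers are made up for illustration.

```python
import numpy as np

def ensemble_predict(probs_a, probs_b):
    """Average the per-class probabilities of two models and pick the top class."""
    avg = (np.asarray(probs_a) + np.asarray(probs_b)) / 2.0
    return avg.argmax(axis=1)

# Toy, made-up probabilities for 3 samples and 3 classes.
labels = np.array([0, 1, 2])
probs_a = np.array([[0.60, 0.30, 0.10],
                    [0.50, 0.40, 0.10],   # model A gets sample 1 wrong
                    [0.10, 0.20, 0.70]])
probs_b = np.array([[0.70, 0.20, 0.10],
                    [0.10, 0.80, 0.10],
                    [0.20, 0.45, 0.35]])  # model B gets sample 2 wrong

acc_a = (probs_a.argmax(axis=1) == labels).mean()
acc_b = (probs_b.argmax(axis=1) == labels).mean()
acc_ens = (ensemble_predict(probs_a, probs_b) == labels).mean()
print(acc_a, acc_b, acc_ens)
```

In this toy case each model misses a different sample, so the averaged prediction recovers both. Real models will not always complement each other this neatly.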

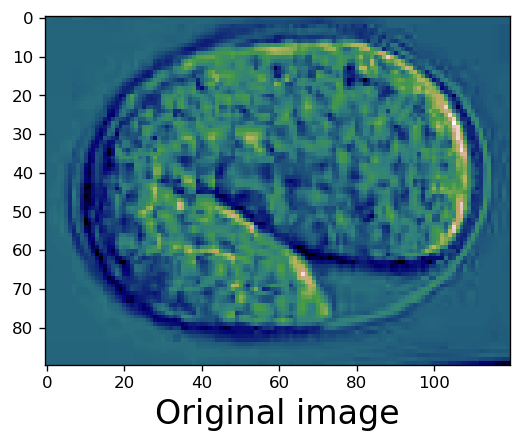

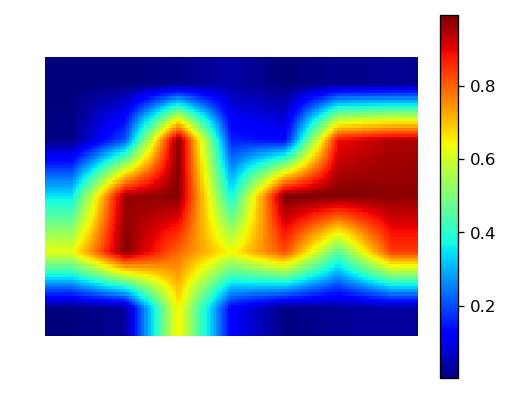

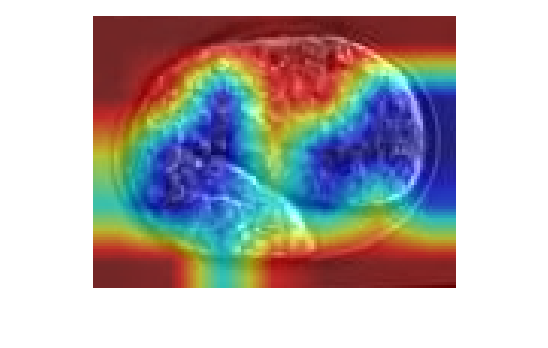

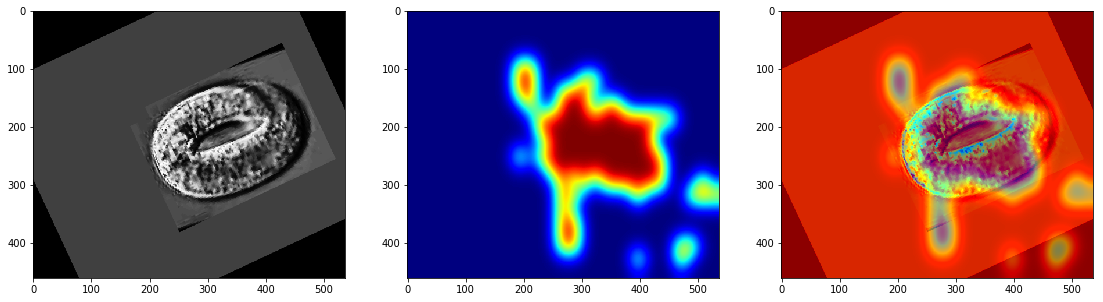

Class activation heatmaps on deep neural networks

- They show the regions of the image that produced the strongest activations for a given label in a trained classification model.

- In simpler words, they tell us which regions of the image made the model decide that it belongs to a certain label “x”.

- When the heatmap is superimposed on the original image, it gives us insight into how the CNN “looked” at the image.
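As a rough illustration of how such a heatmap can be computed and superimposed, the sketch below uses a CAM-style weighted sum of the last convolutional feature maps. It assumes the feature maps and the per-channel weights for label "x" have already been extracted from the model (Grad-CAM-style methods would derive those weights from gradients instead). The function names, the toy arrays, and the `alpha` blend factor are all hypothetical.

```python
import numpy as np

def class_activation_map(feature_maps, class_weights):
    """feature_maps: (H, W, K), class_weights: (K,). Returns an (H, W) map in [0, 1]."""
    cam = feature_maps @ class_weights   # weighted sum over the K channels
    cam = np.maximum(cam, 0.0)           # keep only positive evidence for the label
    peak = cam.max()
    return cam / peak if peak > 0 else cam

def overlay(image, heatmap, alpha=0.4):
    """Blend a grayscale image in [0, 1] with a nearest-neighbour upsampled heatmap.

    Assumes the image height and width are integer multiples of the heatmap's.
    """
    scale_h = image.shape[0] // heatmap.shape[0]
    scale_w = image.shape[1] // heatmap.shape[1]
    upsampled = np.repeat(np.repeat(heatmap, scale_h, axis=0), scale_w, axis=1)
    return (1 - alpha) * image + alpha * upsampled

# Toy example: 2x2 feature maps with 2 channels, and a blank 4x4 image.
feature_maps = np.zeros((2, 2, 2))
feature_maps[0, 0, 0] = 1.0              # channel 0 fires in the top-left cell
feature_maps[1, 1, 1] = 1.0              # channel 1 fires in the bottom-right cell
class_weights = np.array([2.0, -1.0])    # channel 0 supports the label, channel 1 opposes it

heatmap = class_activation_map(feature_maps, class_weights)
blended = overlay(np.zeros((4, 4)), heatmap)
```

Here only the top-left region ends up highlighted, because it is the only place where a channel that supports the label is active. For display, the blended array could be passed to any image plotting routine with a colour map.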